🧹 How to Drop a Spark Delta Table and Clean Up Associated Files in Databricks

When working with Delta Lake tables in Databricks, it’s not enough to simply drop the table from the metastore—you also need to ensure that the underlying data files are removed to prevent clutter and maintain a clean data lake. This process is especially important when dealing with external Delta tables, where Spark does not automatically manage file deletion.

The following steps outline a reliable method to fully remove a Delta table and its associated files using Spark SQL and command-line tools.

🔹 Step 1: Identify the Schema and Table

Begin by locating the schema and table you want to delete. Replace placeholder values like schemaName and tableName with the actual names used in your environment. This ensures you’re targeting the correct table throughout the process.

🔹 Step 2: Inspect the Table Metadata

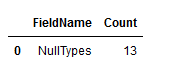

Using Spark SQL within Databricks, run a query to describe the table. This will return detailed metadata, including the location of the table’s data files in DBFS (Databricks File System). If you’re using the default schema, it may be named default, but adjust as needed.

🔹 Step 3: Locate the Storage Path

In the metadata output, scroll down to find the Location field. This value points to the directory where the table’s data files are stored. Copy this path—it will be used later to manually delete the files if necessary.

🔹 Step 4: Drop the Table from the Metastore

Execute a Spark SQL command to drop the table. This removes the table’s metadata from the catalog. If the table is managed, this step may also delete the associated files. However, for external tables, the files will remain and must be deleted manually.

🔹 Step 5: Delete the Data Files from DBFS

Using your preferred method of interacting with DBFS—whether through the command line, a Python script, or a Databricks notebook—delete the directory identified earlier. This ensures that all data files associated with the table are removed from storage.

✅ Why This Matters

Delta tables support ACID transactions and maintain a transaction log. Improper deletion—such as manually removing files without dropping the table—can corrupt the log and lead to inconsistent behavior. By following this structured approach, you ensure both the metadata and physical files are properly cleaned up.

This method is especially useful when:

- Decommissioning obsolete datasets

- Resetting environments for testing

- Automating cleanup in CI/CD pipelines

Let me know if you’d like help turning this into a reusable script or integrating it with your workflow.

#Step 1

#Find and replace schemaName

#Find and replace tableName

#Step 2

#Find the table

#Via Databricks run the Spark SQL query below

#default is schema, change as needed

DESC FORMATTED schemaName.tableName;

#Step 3

#From the table returned scroll down to "location" and copy the field value

#Find and replace locationFieldValue

#Step 5

#Via Databricks using Spark SQL drop the table

DROP TABLE tableName

#Step 6

#Find and replace locationFieldValue

#By the means you use to interact with Databricks File System (dbfs), e.g. cmd python virtual environment

#Run command below

dbfs rm -r "locationFieldValue"